Classifying humans by movement

For a robot moving through public spaces, planning a path from one place to another is only part of the story. Path planning on its own is a well-understood problem; people in the environment, however, add a further layer of complexity to any robot path planning.

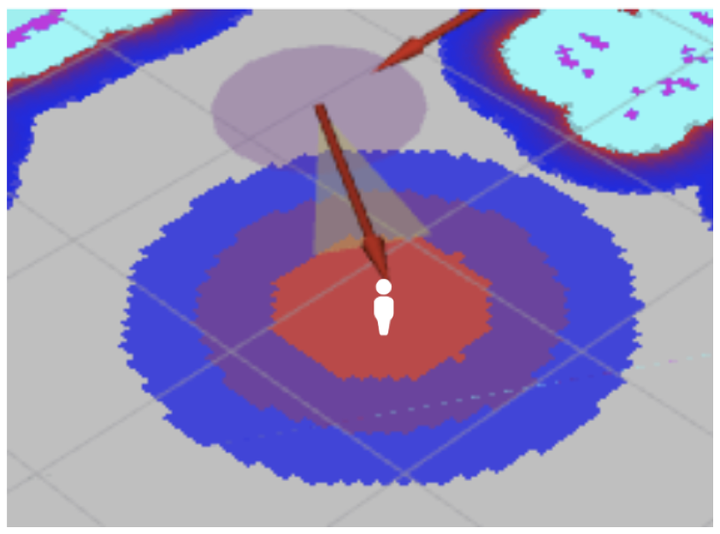

Prior research indicates that the speed and direction of movement, of both people and the robot, are important factors in human-robot interaction. We therefore want to investigate detecting the people around the robot and then classifying their movements.

There are several stages of experimentation:

- Can we use existing people-detection techniques to identify people near the robot?

- Alternatively, can we implement our own low-cost methods that detect people from their movement around the robot?

- Can we combine techniques such as face detection, leg detection, and movement detection to populate a data structure of known people around the robot consistently and quickly?

- Can we classify people around the robot by movement type, such as wandering or stationary?

- Can we classify them by group type (single, double, group)?
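The data structure asked about in the third question could take many forms. A minimal sketch, assuming every detector (face, legs, motion) reports positions in the robot frame in metres, is a registry that matches each detection to the nearest known person within a gating radius, creates a new entry otherwise, and forgets people not seen for a timeout. Detections of the same person from different detectors then accumulate on one entry. The final function groups people by linking anyone within a fixed distance of someone else, yielding the single/double/group labels from the last question. `Detection`, `PeopleRegistry`, `group_labels` and all thresholds (0.5 m gate, 2 s timeout, 1.2 m link distance) are hypothetical choices for illustration, not an existing system.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Detection:
    """One person detection in the robot frame, in metres."""
    x: float
    y: float
    source: str  # hypothetical detector name, e.g. "face", "legs", "motion"


@dataclass
class TrackedPerson:
    person_id: int
    x: float
    y: float
    last_seen: float  # time of the most recent matching detection, in seconds
    sources: set = field(default_factory=set)  # detectors that have seen this person


class PeopleRegistry:
    """Hypothetical registry of the people currently known to be near the robot."""

    def __init__(self, gate_m=0.5, timeout_s=2.0):
        self.gate_m = gate_m        # max distance for matching a detection to a person
        self.timeout_s = timeout_s  # forget people not detected for this long
        self.people = {}            # person_id -> TrackedPerson
        self._next_id = 0

    def update(self, detections, t):
        for det in detections:
            # Greedy nearest-neighbour association within the gating radius.
            best, best_d = None, self.gate_m
            for person in self.people.values():
                d = math.hypot(person.x - det.x, person.y - det.y)
                if d <= best_d:
                    best, best_d = person, d
            if best is None:
                # No known person nearby: start a new entry.
                best = TrackedPerson(self._next_id, det.x, det.y, t)
                self.people[self._next_id] = best
                self._next_id += 1
            else:
                best.x, best.y, best.last_seen = det.x, det.y, t
            best.sources.add(det.source)
        # Drop people who have not been detected recently.
        self.people = {pid: p for pid, p in self.people.items()
                       if t - p.last_seen <= self.timeout_s}


def group_labels(people, link_m=1.2):
    """Label each person 'single', 'double' or 'group' by proximity clustering."""
    ids = list(people)
    parent = {pid: pid for pid in ids}  # union-find forest

    def find(pid):
        while parent[pid] != pid:
            parent[pid] = parent[parent[pid]]  # path halving
            pid = parent[pid]
        return pid

    # Link every pair of people closer than link_m into the same cluster.
    for k, a in enumerate(ids):
        for b in ids[k + 1:]:
            pa, pb = people[a], people[b]
            if math.hypot(pa.x - pb.x, pa.y - pb.y) <= link_m:
                parent[find(a)] = find(b)

    sizes = {}
    for pid in ids:
        root = find(pid)
        sizes[root] = sizes.get(root, 0) + 1
    names = {1: "single", 2: "double"}
    return {pid: names.get(sizes[find(pid)], "group") for pid in ids}


if __name__ == "__main__":
    registry = PeopleRegistry()
    registry.update([Detection(1.0, 0.0, "legs"), Detection(1.1, 0.05, "face"),
                     Detection(1.8, 0.2, "legs"), Detection(5.0, 3.0, "motion")], t=0.0)
    print(group_labels(registry.people))  # {0: 'double', 1: 'double', 2: 'single'}
```

Greedy nearest-neighbour association is the simplest option; a tracker with a motion model (for example a Kalman filter) and an optimal assignment step would cope better with people passing close to one another.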

Our goal is to classify the behavior of people around the robot based on their movement, and to determine what level of classification matters for the range of social tasks the robot attempts. For example, Kanda et al. use people's trajectories to decide which people are best to approach with promotional material. Rather than such task-specific classification, we propose a more general one, using motion labels such as “hurrying”, “standing”, “sitting”, and “meandering” together with coarse directional labels such as “toward”, “away”, and “crossing”. We contend that these labels will be sufficient for higher-level processes to decide which people are best to approach, or which people are likely to approach the robot themselves, so that the robot can prepare for that event, for instance by turning toward the person.
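As one possible starting point, these labels can be derived from a short trajectory of a person's position. The sketch assumes the track is a list of `(t, x, y)` samples in the robot frame (robot at the origin, metres and seconds). Average speed separates stationary people from moving ones; the ratio of net displacement to distance covered ("straightness") separates meandering from purposeful motion; and the angle between the displacement and the bearing from the robot gives “toward”, “away”, or “crossing”. The function name, every threshold, the extra “walking” label for ordinary purposeful movement, and the head-height cue used for “sitting” (a 2D trajectory alone cannot tell sitting from standing) are assumptions for illustration.

```python
import math


def classify_movement(track, head_height_m=None, still_mps=0.2,
                      hurry_mps=1.6, min_straightness=0.6, sit_head_m=1.3):
    """Return (motion_label, direction_label) for a list of (t, x, y) samples.

    Positions are in the robot frame (robot at the origin), in metres and
    seconds. Every threshold here is a hypothetical placeholder, not a
    calibrated value.
    """
    (t0, x0, y0), (t1, x1, y1) = track[0], track[-1]
    if t1 <= t0:
        raise ValueError("track must span a positive time interval")

    dx, dy = x1 - x0, y1 - y0
    net = math.hypot(dx, dy)  # straight-line displacement
    path = sum(math.hypot(b[1] - a[1], b[2] - a[2])
               for a, b in zip(track, track[1:]))  # distance actually covered
    speed = path / (t1 - t0)

    if speed < still_mps:
        # A 2D trajectory cannot separate sitting from standing, so this
        # assumes an extra cue: a head height from another sensor.
        if head_height_m is not None and head_height_m < sit_head_m:
            return "sitting", None
        return "standing", None

    straightness = net / path if path else 0.0  # 1.0 straight, near 0 for loops
    if straightness < min_straightness:
        motion = "meandering"
    elif speed > hurry_mps:
        motion = "hurrying"
    else:
        motion = "walking"  # hypothetical label for ordinary purposeful walking

    # Bearing from the robot to the midpoint of the displacement.
    mx, my = (x0 + x1) / 2, (y0 + y1) / 2
    dist = math.hypot(mx, my)
    if net == 0 or dist == 0:
        return motion, None
    # Cosine of the angle between the displacement and that bearing:
    # near -1 means closing in on the robot, near +1 means moving away.
    cos_radial = (dx * mx + dy * my) / (net * dist)
    if cos_radial < -0.5:
        return motion, "toward"
    if cos_radial > 0.5:
        return motion, "away"
    return motion, "crossing"
```

In practice the function would run over a sliding window of each tracked person's history, with labels smoothed over time and thresholds tuned against labelled recordings.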

For in-lab scenarios, we can validate our people-detection models against motion capture data, which serves as ground truth.
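One way to carry out this cross-referencing is to match, frame by frame, each detected position to the nearest unmatched motion-capture position within a tolerance, then report precision (the fraction of detections that correspond to a real person) and recall (the fraction of real people that were detected). The sketch assumes both sources are already time-synchronised and expressed in the same frame as `(x, y)` tuples in metres; the 0.3 m tolerance and the function names are hypothetical.

```python
import math


def match_frame(detected, truth, radius_m=0.3):
    """Greedily match detections to ground-truth positions one-to-one.

    Returns (true_positives, false_positives, false_negatives) for one frame.
    """
    # All detection/ground-truth pairs, closest first.
    pairs = sorted(
        (math.dist(d, g), i, j)
        for i, d in enumerate(detected)
        for j, g in enumerate(truth)
    )
    used_d, used_g = set(), set()
    for dist, i, j in pairs:
        if dist > radius_m:
            break  # every remaining pair is even further apart
        if i not in used_d and j not in used_g:
            used_d.add(i)
            used_g.add(j)
    tp = len(used_d)
    return tp, len(detected) - tp, len(truth) - tp


def precision_recall(frames, radius_m=0.3):
    """frames: iterable of (detected, truth) lists of (x, y) tuples per frame."""
    tp = fp = fn = 0
    for detected, truth in frames:
        a, b, c = match_frame(detected, truth, radius_m)
        tp, fp, fn = tp + a, fp + b, fn + c
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

Greedy matching by ascending distance is a simple approximation; an optimal one-to-one assignment (the Hungarian algorithm, available as `scipy.optimize.linear_sum_assignment`) could replace it when people stand close together.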

References

Takayuki Kanda, Dylan F. Glas, Masahiro Shiomi, Hiroshi Ishiguro, and Norihiro Hagita. Who will be the customer?: A social robot that anticipates people’s behavior from their trajectories. In Proceedings of the 10th International Conference on Ubiquitous Computing (UbiComp ’08), pages 380–389, New York, NY, USA, 2008. ACM.