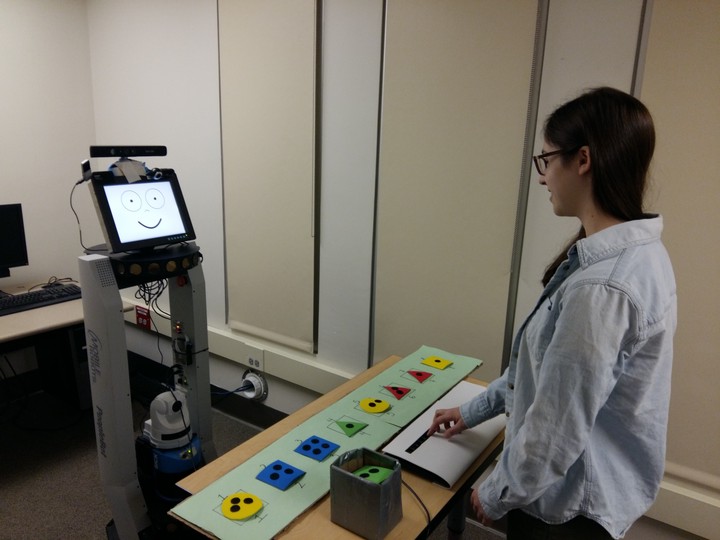

Robot Face

The face the robot displays assists in robot communication, using both traditional and non-traditional mechanisms. For instance, we can control eye gaze direction and facial expression, but we can also supplement those modalities with novel techniques such as color or sound to communicate robot intent.

We will study human reactions to the face in three stages: first in online Mechanical Turk experiments, then under lab conditions, and finally in the wild. In the lab, we combine human observation with sub-millimeter tracking of head and body orientation from our high-precision motion capture camera system. When the robot is giving directional instructions, or talking about objects at specific spatial locations, we can see how users react to head or eye movements given by the robot. For instance, when the robot directs users to a particular door and indicates which door using a head or eye gesture, we can track the user’s movements with extreme accuracy. We can use this to determine whether people consciously or subconsciously follow the movements of the robot’s head, eyes, or both.
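As a rough illustration of how tracked head orientation could be used to decide whether a user followed a gesture, the sketch below measures the angle between a user's facing direction and the direction to the indicated door. It is not our analysis pipeline: the function names, the `(head_pos, head_dir)` sample layout, and the 15 degree threshold are all assumptions made for this example.

```python
import math

def angle_to_target(head_pos, head_dir, target_pos):
    """Angle in degrees between the head's facing direction and the
    direction from the head to the target (e.g. the indicated door)."""
    to_target = tuple(t - p for t, p in zip(target_pos, head_pos))
    dot = sum(a * b for a, b in zip(head_dir, to_target))
    norm = math.hypot(*head_dir) * math.hypot(*to_target)
    if norm == 0:
        raise ValueError("head direction and target offset must be non-zero")
    # Clamp to guard against floating-point values slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))

def followed_gesture(samples, target_pos, threshold_deg=15.0):
    """Return True if any tracked sample after the robot's gesture faces
    the target within threshold_deg (a hypothetical cutoff).

    samples: iterable of (head_pos, head_dir) tuples of 3D coordinates.
    """
    return any(
        angle_to_target(pos, direction, target_pos) <= threshold_deg
        for pos, direction in samples
    )

# Hypothetical samples: the user first faces along x, then turns toward y.
samples = [((0, 0, 0), (1, 0, 0)), ((0, 0, 0), (0, 1, 0))]
door = (0, 5, 0)
print(followed_gesture(samples, door))
```

A real study would also need to account for timing relative to the gesture onset and for the user's baseline head motion, which this sketch ignores.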

Resources

References

Sean Andrist, Tomislav Pejsa, Bilge Mutlu, and Michael Gleicher. Designing effective gaze mechanisms for virtual agents. In Proceedings of the SIGCHI conference on Human factors in computing systems, pages 705–714, 2012.

Kim Baraka, Stephanie Rosenthal, and Manuela Veloso. Enhancing Human Understanding of a Mobile Robot’s State and Actions using Expressive Lights. In Proceedings of RO-MAN’16, the IEEE International Symposium on Robot and Human Interactive Communication, 2016.

Aaron Cass, Kristina Striegnitz, and Nick Webb. A Farewell to Arms: Non-verbal Communication for Non-humanoid Robots. In Workshop on Natural Language Generation for Human-Robot Interaction at the 11th International Conference on Natural Language Generation (INLG 2018), 2018.